Revolutionizing Recommendations: A Deep Dive into Graph Neural Networks

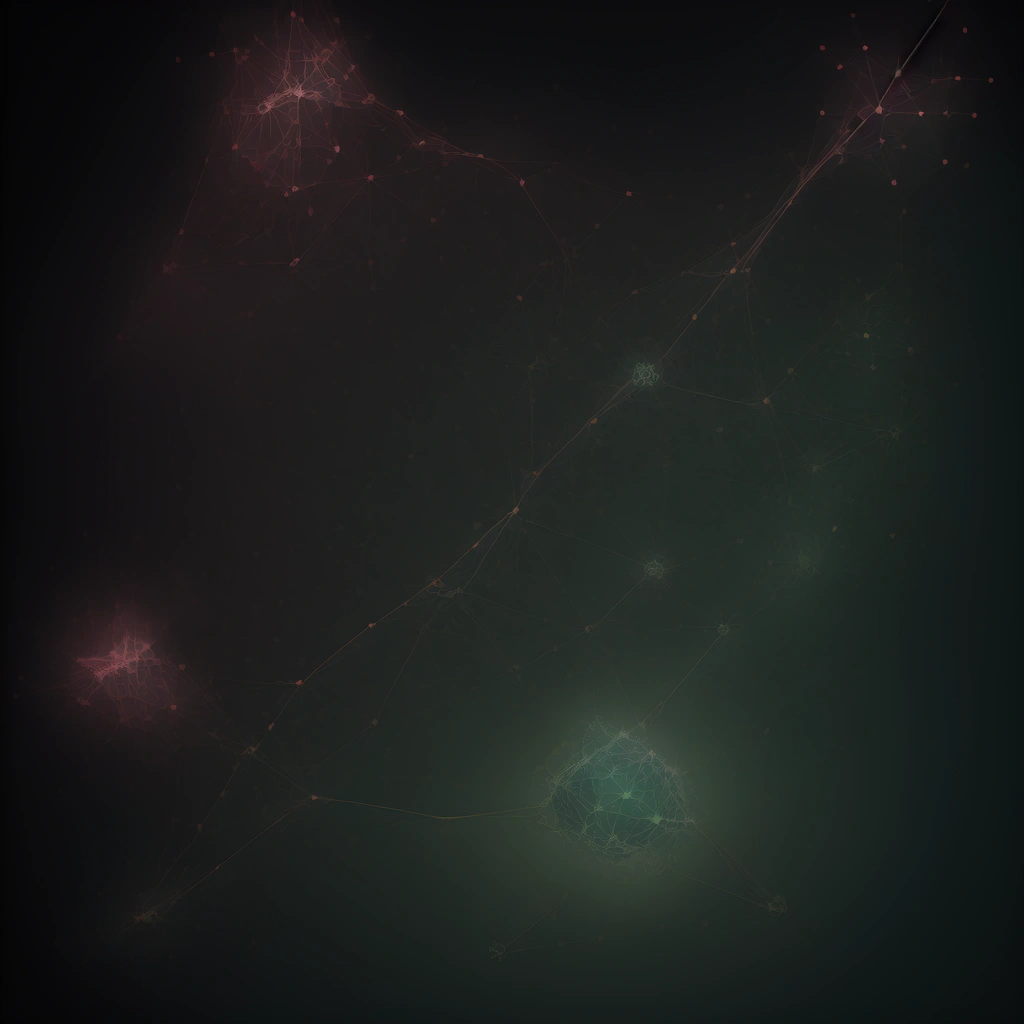

The Rise of GNNs in Recommendation: A New Era of Personalization

The relentless pursuit of personalized experiences has propelled recommendation systems to the forefront of technological innovation. From suggesting the next must-watch show on streaming services to curating tailored product lists on e-commerce platforms, these systems shape our digital interactions daily. While traditional methods like collaborative filtering have served as the bedrock for years, a new wave of techniques is emerging, promising unprecedented accuracy and adaptability. Enter Graph Neural Networks (GNNs), a deep learning paradigm poised to revolutionize the field.

As Intel rolls out its 5th Gen Xeon Scalable processors, optimized for AI workloads, the computational barriers to deploying complex GNN models are shrinking, making them increasingly viable for real-world applications. Entrepreneurs such as Ann Marie Puig have also emphasized resilience and scalability for startups, which underlines the need for robust and adaptable recommendation systems. GNN recommendation systems are gaining traction because they offer a more nuanced approach to personalization than traditional methods.

Unlike collaborative filtering, which primarily relies on user-item interaction history, GNNs leverage the underlying graph structure of user and item relationships. This allows a GNN recommendation engine to capture complex dependencies and generate more accurate and relevant personalized recommendations. For instance, consider a social networking platform where users are connected through friendships. A GNN can analyze this network to identify users with similar interests, even if they haven’t directly interacted with the same content, leading to more effective recommendations.

The power of GNNs lies in their ability to perform reasoning over graphs, enabling them to infer hidden patterns and relationships. Graph Convolutional Networks (GCNs), a specific type of GNN, are particularly well-suited for recommendation tasks. These networks propagate information across the graph, aggregating features from neighboring nodes to learn representations for users and items. Frameworks like PyTorch Geometric and DGL simplify the implementation of GNNs, providing tools for constructing and training these models efficiently.
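For reference, the propagation step described above is commonly written in the following form. The notation is not taken from this article; it is the widely used formulation of a single GCN layer:

$$
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right)
$$

Here $\tilde{A} = A + I$ is the adjacency matrix with self-loops added, $\tilde{D}$ is the diagonal degree matrix of $\tilde{A}$, $H^{(l)}$ holds the node representations at layer $l$ (with $H^{(0)}$ the input features), $W^{(l)}$ is the layer's learnable weight matrix, and $\sigma$ is a nonlinearity such as ReLU. In a recommendation graph, the rows of $H^{(l)}$ correspond to user and item nodes.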

The development of frameworks such as PyTorch Geometric and DGL has fueled the growth of deep learning recommendation systems, making them more accessible to researchers and practitioners alike. Beyond accuracy, GNNs can make recommendations easier to explain, a crucial aspect of building user trust. By visualizing the paths within the graph that led to a particular recommendation, we can understand the reasoning behind it. For example, a GNN might recommend a movie to a user because their friends have watched and enjoyed similar films, and these friends share common interests based on their past viewing history. This transparency contrasts with the ‘black box’ nature of some other deep learning models, fostering greater confidence in the recommendations. As GNNs continue to mature, their ability to provide both accurate and explainable recommendations should strengthen their position as a leading technology in the field.

GNNs vs. Traditional Recommendation Algorithms: A Paradigm Shift

GNNs represent a significant departure from traditional recommendation algorithms. Collaborative filtering, for example, relies primarily on user-item interaction data, often overlooking the rich relational information embedded within the user and item networks. GNNs, on the other hand, explicitly model these relationships by representing users and items as nodes in a graph, with edges denoting interactions or similarities. This graph structure allows GNNs to learn complex patterns and dependencies that would be invisible to conventional methods.

The core advantage lies in GNNs’ ability to perform message passing between nodes. Each node aggregates information from its neighbors, effectively capturing the influence of related users and items. This process is repeated over multiple layers, so that a node’s representation after k layers reflects nodes up to k hops away. Compared to matrix factorization or content-based filtering, GNNs can ease cold-start problems (new users or items with limited interaction data) when node features or other side information are available, and they can incorporate diverse sources of information, such as social networks, item attributes, and user profiles.
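To make the message-passing idea concrete, here is a minimal sketch in plain Python. The toy graph, the single scalar feature per node, and the mean aggregation are all illustrative assumptions; a real GNN would use learned weight matrices and vector features.

```python
# Toy undirected user-item graph: node -> list of neighbours (hypothetical data).
neighbors = {
    "u1": ["i1"],
    "u2": ["i1", "i2"],
    "i1": ["u1", "u2"],
    "i2": ["u2"],
}

# One scalar feature per node; only item i2 carries a signal at the start.
features = {"u1": 0.0, "u2": 0.0, "i1": 0.0, "i2": 1.0}


def message_passing_round(feats, nbrs):
    """Replace each node's feature with the mean over itself and its neighbours."""
    updated = {}
    for node, adjacent in nbrs.items():
        values = [feats[node]] + [feats[n] for n in adjacent]
        updated[node] = sum(values) / len(values)
    return updated


one_hop = message_passing_round(features, neighbors)
two_hop = message_passing_round(one_hop, neighbors)
three_hop = message_passing_round(two_hop, neighbors)

# u1 is three hops from i2 (u1 - i1 - u2 - i2), so the signal from i2
# only reaches u1 after the third round.
print(one_hop["u1"], two_hop["u1"], three_hop["u1"])
```

Each round corresponds to one GNN layer: stacking more layers lets information travel further across the graph, which is how a user can be influenced by items they never touched directly.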

The shift from traditional methods to GNN recommendation systems is akin to moving from static maps to real-time, interactive navigation. Traditional recommendation systems, while effective to a degree, often treat user-item interactions as isolated events. A GNN recommendation engine, however, leverages the interconnectedness of users and items to create a more holistic understanding. For instance, a deep learning recommendation system built on GNNs can identify subtle patterns like a user’s preference for movies with similar actors or directors, even if the user hasn’t watched those movies before.

This is achieved through Graph Convolutional Networks, a specific type of GNN that excels at extracting features from graph-structured data. Furthermore, the flexibility of GNNs allows for the integration of various data sources. Unlike traditional algorithms that might struggle to combine user profiles, item descriptions, and social network data, GNNs can incorporate these diverse inputs into the graph structure as node features, edge features, or additional edges. This capability is particularly valuable in scenarios where rich contextual information is available. Consider an e-commerce platform where GNNs can leverage not only purchase history but also product categories, user reviews, and even social connections to generate highly personalized recommendations.

Frameworks like PyTorch Geometric (PyG) and DGL (Deep Graph Library) provide powerful tools for building and training GNNs, making it easier for data scientists to implement these advanced techniques. In essence, GNNs are revolutionizing the field of recommendation systems by moving beyond simple user-item interactions and embracing the complex relationships that define our digital world. The ability of a GNN-powered recommendation engine to capture these nuances translates into more accurate, relevant, and personalized recommendations, ultimately enhancing user experience and driving business value. As graph data becomes increasingly prevalent, the adoption of GNNs in recommendation systems is poised to accelerate, solidifying their position as a cornerstone of modern AI-driven personalization.

Step-by-Step Guide: Building a GNN Recommendation System with PyTorch Geometric

Building a GNN-based recommendation system involves several key steps. Let’s consider using PyTorch Geometric (PyG) for implementation. First, data preparation is crucial. This involves constructing the user-item interaction graph, where nodes represent users and items, and edges represent interactions (e.g., purchases, ratings). Features can be added to both nodes and edges to enrich the graph representation. For instance, user nodes might include demographic information, while item nodes could contain product descriptions. Edge features could represent the timestamp of the interaction or the rating given by the user.
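The graph construction step can be sketched without any framework. The example below is a minimal illustration under stated assumptions: the interaction log, the ID names, and the `build_graph` helper are hypothetical, and ratings stand in for edge features.

```python
# Hypothetical interaction log: (user_id, item_id, rating).
interactions = [
    ("alice", "book_1", 5.0),
    ("alice", "book_2", 3.0),
    ("bob", "book_2", 4.0),
]


def build_graph(rows):
    """Map raw IDs to contiguous node indices and build a COO edge list."""
    user_index, item_index = {}, {}
    for user, item, _ in rows:
        user_index.setdefault(user, len(user_index))
        item_index.setdefault(item, len(item_index))

    num_users = len(user_index)
    sources, targets, edge_attr = [], [], []
    for user, item, rating in rows:
        u = user_index[user]
        i = num_users + item_index[item]  # item nodes are numbered after user nodes
        # Add both directions so messages can flow user -> item and item -> user.
        sources += [u, i]
        targets += [i, u]
        edge_attr += [rating, rating]
    return user_index, item_index, [sources, targets], edge_attr


user_index, item_index, edge_index, edge_attr = build_graph(interactions)
print(edge_index)
```

The two-row `edge_index` layout mirrors the `[2, num_edges]` shape that PyG uses for edges, so these lists could be converted to a `torch.long` tensor and passed to a `Data` object together with node features.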

Next, define the GNN model architecture. Common choices are Graph Convolutional Networks (GCNs) and GraphSAGE. These models learn node embeddings by aggregating information from their neighbors. The number of layers, the aggregation function, and the embedding dimension are important hyperparameters to tune. For training, a loss function such as Bayesian Personalized Ranking (BPR) or binary cross-entropy is typically used. The model is trained to predict the likelihood of a user interacting with a given item.
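As an illustration of the BPR objective mentioned above, the sketch below computes the loss for a single (user, positive item, negative item) triple in plain Python. The embedding values are made up, and scoring by dot product is an assumption; in practice the embeddings would come from the GNN and the loss would be averaged over a batch.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def bpr_loss(user_vec, pos_item_vec, neg_item_vec):
    """BPR loss for one triple: -log(sigmoid(score_pos - score_neg))."""
    diff = dot(user_vec, pos_item_vec) - dot(user_vec, neg_item_vec)
    return softplus(-diff)


# Hypothetical 2-dimensional embeddings.
user = [1.0, 0.5]
liked_item = [0.9, 0.4]
skipped_item = [-0.3, 0.1]

good = bpr_loss(user, liked_item, skipped_item)  # positive item ranked above negative
bad = bpr_loss(user, skipped_item, liked_item)   # ranking reversed
print(good, bad)
```

The loss shrinks as the positive item's score pulls ahead of the negative item's score, which is why BPR optimizes the relative ordering of items rather than absolute rating values.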

Finally, evaluate the model’s performance on a held-out test set. The code implementation would involve loading the data into a PyG `Data` object, defining the GNN model using PyG’s modules, and training the model using an optimizer like Adam. Regularization techniques, such as dropout, can help prevent overfitting.

Data preprocessing for a GNN recommendation engine extends beyond simple graph construction. Feature engineering plays a pivotal role in enhancing the model’s predictive power. Consider incorporating techniques like embedding lookups for categorical features, transforming raw text data into meaningful representations using pre-trained language models, and normalizing numerical features to ensure stable training.
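Two of the preprocessing steps mentioned above, normalizing numerical features and preparing categorical features for an embedding lookup, can be sketched as follows. The feature names and values are hypothetical, and z-score normalization is just one reasonable choice.

```python
import statistics


def zscore(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature carries no information
    return [(v - mean) / std for v in values]


def build_vocab(categories):
    """Map each distinct category to an integer index for an embedding table."""
    vocab = {}
    for category in categories:
        vocab.setdefault(category, len(vocab))
    return vocab


# Hypothetical node features.
user_ages = [20.0, 30.0, 40.0]
item_genres = ["drama", "comedy", "drama"]

normalized_ages = zscore(user_ages)
genre_vocab = build_vocab(item_genres)
genre_ids = [genre_vocab[g] for g in item_genres]
print(normalized_ages, genre_ids)
```

The integer IDs in `genre_ids` are what an embedding layer would consume, with one learned vector per vocabulary entry.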

Furthermore, handling cold-start problems, where new users or items lack sufficient interaction data, requires careful consideration. Techniques such as content-based feature injection, meta-learning, or transfer learning from auxiliary datasets can mitigate these challenges and improve the robustness of the personalized recommendations.

The selection of the GNN architecture is a critical decision point. While Graph Convolutional Networks (GCNs) offer a foundational approach to aggregating neighborhood information, other architectures like GraphSAGE, GAT (Graph Attention Networks), and newer variants provide alternative mechanisms for learning node embeddings.

GraphSAGE, for example, employs sampling techniques to handle large neighborhoods, making it suitable for scalable GNN recommendation systems. GAT introduces attention mechanisms, allowing the model to weigh the importance of different neighbors during aggregation. Experimenting with different architectures and their hyperparameters is essential to optimize performance for a specific recommendation task. Frameworks like DGL (Deep Graph Library) provide an alternative to PyTorch Geometric, offering similar functionality with potentially different performance characteristics.
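The attention-based aggregation that GAT uses can be sketched for a single target node as below. This is a simplified illustration, not a full GAT layer: the learned linear transform that GAT applies to features before scoring is omitted, and the feature vectors and attention vector are made-up values.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU activation used when scoring neighbours."""
    return x if x > 0 else slope * x


def attention_aggregate(target, neighbor_feats, attn_vec):
    """Return softmax attention weights and the weighted sum of neighbour features."""
    # Raw score per neighbour: LeakyReLU(a . [h_target || h_neighbour]).
    scores = []
    for h in neighbor_feats:
        concat = target + h
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vec, concat))))

    # Softmax turns the scores into weights that sum to 1.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    dim = len(neighbor_feats[0])
    aggregated = [
        sum(w * h[d] for w, h in zip(weights, neighbor_feats)) for d in range(dim)
    ]
    return weights, aggregated


# Hypothetical 2-dimensional features and a 4-dimensional attention vector.
user_feat = [1.0, 0.0]
item_feats = [[1.0, 0.0], [0.0, 1.0]]
attn_vec = [0.5, 0.5, 1.0, -1.0]

weights, aggregated = attention_aggregate(user_feat, item_feats, attn_vec)
print(weights, aggregated)
```

With these values the first neighbour receives the larger weight, so it dominates the aggregated representation. A GCN-style mean would instead weight both neighbours equally.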

On the training side, optimizing the loss function, selecting an appropriate optimizer (e.g., Adam, SGD), and tuning hyperparameters are crucial steps. Learning rate scheduling and gradient clipping can help stabilize training, while early stopping helps prevent overfitting. Furthermore, the choice of negative sampling strategy significantly impacts the model’s performance. Hard negative sampling, where the model is trained on challenging negative examples, can lead to improved accuracy. Regularization techniques, such as dropout and weight decay, further improve the generalization ability of the recommendation model.
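The difference between uniform and hard negative sampling can be shown with a small sketch. The item IDs, the score table, and both helper functions are hypothetical. Note that unseen items are only assumed to be negatives, and some may simply be items the user has not discovered yet.

```python
import random


def sample_uniform_negative(seen_items, all_items, rng):
    """Pick a random item the user has not interacted with."""
    candidates = [item for item in all_items if item not in seen_items]
    return rng.choice(candidates)


def sample_hard_negative(seen_items, scores):
    """Pick the unseen item that the current model scores highest."""
    unseen = {item: s for item, s in scores.items() if item not in seen_items}
    return max(unseen, key=unseen.get)


all_items = ["i1", "i2", "i3", "i4"]
seen = {"i1", "i2"}
# Hypothetical scores from the current model for one user.
model_scores = {"i1": 0.9, "i2": 0.8, "i3": 0.7, "i4": 0.1}

rng = random.Random(0)  # seeded for reproducibility
easy_negative = sample_uniform_negative(seen, all_items, rng)
hard_negative = sample_hard_negative(seen, model_scores)
print(easy_negative, hard_negative)
```

Hard negatives give a stronger training signal because the model currently ranks them close to the positives. In practice they are often mixed with uniform negatives to limit the effect of false negatives.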

Scaling GNNs: Techniques for Handling Large Datasets

Handling large-scale datasets presents a significant challenge for GNN-based recommendation systems. The computational complexity of GNNs can increase dramatically with the size of the graph, hindering real-time personalized recommendations. Several techniques can be employed to address this issue and ensure the GNN recommendation engine remains performant. One common approach is mini-batch training, where the graph is divided into smaller subgraphs for processing. PyTorch Geometric (PyG) provides excellent tools for efficient mini-batch sampling, allowing for optimized memory usage and faster iteration times.

This is particularly useful when dealing with graphs containing millions or even billions of nodes and edges, a common scenario in large e-commerce platforms or social networks. For example, a large retailer might use mini-batch training to process only a subset of user-product interactions when updating recommendations, significantly reducing the computational burden. Another technique involves node sampling, where only a subset of nodes and their neighbors are considered during each iteration. This reduces the computational cost without sacrificing too much accuracy, enabling the GNN to focus on the most relevant parts of the graph for a given user or item.
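A minimal sketch of neighbor sampling for mini-batch training is shown below, assuming an adjacency-list graph and one fanout value per GNN layer. The graph and helper names are illustrative. PyG offers this kind of sampling through its `NeighborLoader`, which should be preferred for real workloads.

```python
import random


def sample_neighbors(adjacency, seeds, fanout, rng):
    """Sample at most `fanout` neighbours for each seed node."""
    sampled = {}
    for node in seeds:
        nbrs = adjacency.get(node, [])
        if len(nbrs) > fanout:
            nbrs = rng.sample(nbrs, fanout)
        sampled[node] = list(nbrs)
    return sampled


def sample_subgraph(adjacency, batch, fanouts, rng):
    """Expand a mini-batch of seed nodes hop by hop, one fanout per layer."""
    layers = []
    frontier = list(batch)
    for fanout in fanouts:
        sampled = sample_neighbors(adjacency, frontier, fanout, rng)
        layers.append(sampled)
        # The next hop starts from the nodes reached in this hop (deduplicated).
        frontier = list(dict.fromkeys(n for nbrs in sampled.values() for n in nbrs))
    return layers


# Hypothetical user-item graph where u1 has many neighbours.
adjacency = {
    "u1": ["i1", "i2", "i3", "i4"],
    "u2": ["i1"],
    "i1": ["u1", "u2"],
    "i2": ["u1"],
    "i3": ["u1"],
    "i4": ["u1"],
}

rng = random.Random(42)  # seeded for reproducibility
layers = sample_subgraph(adjacency, ["u1"], fanouts=[2, 1], rng=rng)
print(layers)
```

Capping the fanout bounds the work per seed node regardless of how many neighbors a popular item or active user has, which is what keeps memory use predictable on very large graphs.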

Node sampling is a critical optimization for deep learning recommendation systems. Graph partitioning offers another effective strategy, where the graph is divided into multiple partitions that can be processed in parallel. Libraries with distributed training support, such as DGL (Deep Graph Library), can distribute the computation across multiple machines, substantially reducing training time. Consider a scenario where a streaming service with millions of users and movies needs to train a GNN recommendation model. By partitioning the user-movie interaction graph and distributing the training across a cluster of machines, the service can significantly accelerate the model training process and deliver more timely and relevant personalized recommendations.
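The idea behind graph partitioning can be illustrated with a deliberately naive sketch. Round-robin assignment is used here only as a stand-in and the edge list is made up. Production systems typically rely on partitioners such as METIS that try to minimize the number of edges crossing partitions.

```python
def partition_nodes(nodes, num_parts):
    """Assign nodes to partitions round-robin (naive placeholder strategy)."""
    return {node: idx % num_parts for idx, node in enumerate(sorted(nodes))}


def split_edges(edges, assignment, num_parts):
    """Separate edges into per-partition local edges and cross-partition cut edges."""
    local = [[] for _ in range(num_parts)]
    cut = []
    for src, dst in edges:
        if assignment[src] == assignment[dst]:
            local[assignment[src]].append((src, dst))
        else:
            cut.append((src, dst))
    return local, cut


# Hypothetical graph with integer node IDs.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
nodes = {n for edge in edges for n in edge}

assignment = partition_nodes(nodes, num_parts=2)
local_edges, cut_edges = split_edges(edges, assignment, num_parts=2)
print(assignment)
print(local_edges, cut_edges)
```

Here four of the five edges end up crossing partitions. Each cut edge implies communication between machines during message passing, which is why the quality of the partitioning matters as much as the number of workers.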

Furthermore, feature selection and dimensionality reduction techniques can help reduce the size of the node and edge features, improving scalability. For instance, Principal Component Analysis (PCA) can be applied to reduce the dimensionality of user profile features, leading to faster computation without significantly impacting recommendation accuracy. These techniques are crucial for maintaining the efficiency of the recommendation engine.

Beyond these techniques, specialized hardware and algorithmic optimizations play a crucial role. Graph Convolutional Networks (GCNs), a specific type of GNN, can be optimized using techniques like layer sampling and feature aggregation to reduce memory footprint and computational cost.

Moreover, recent Intel Xeon Scalable processors, particularly the 4th and 5th generations, offer enhanced performance for AI workloads, making them a practical option for deploying large-scale GNN recommendation systems. These processors include specialized instructions for accelerating matrix multiplication, an operation at the heart of GNN layers. Furthermore, approximate nearest neighbor search algorithms can speed up the retrieval of similar users or items, further enhancing the scalability of GNN recommendation systems. Efficiently scaling GNNs is not just about handling larger datasets; it’s about enabling more complex and nuanced models that can capture the intricate relationships between users and items, leading to more accurate and personalized recommendations.

Evaluation, Fine-Tuning, and Real-World Applications: Unleashing the Full Potential of GNNs

Evaluating and fine-tuning GNN recommendation models is crucial for achieving optimal performance. Common evaluation metrics include precision, recall, NDCG (Normalized Discounted Cumulative Gain), and MAP (Mean Average Precision), usually computed over the top k recommended items. These metrics measure the accuracy and ranking quality of the recommendations. Hyperparameter optimization is essential for fine-tuning the model. Techniques such as grid search, random search, and Bayesian optimization can be used to find the best combination of hyperparameters.
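The ranking metrics named above can be computed for a single user as in the sketch below. It assumes binary relevance (an item is either relevant or not), and the ranked list and relevant set are made-up examples. Reported numbers would normally be averaged over all test users.

```python
import math


def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k recommendations that are relevant."""
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / k


def recall_at_k(ranked, relevant, k):
    """Fraction of the relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: DCG of the ranking divided by the ideal DCG."""
    dcg = sum(
        1.0 / math.log2(pos + 2)
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


# Hypothetical model output and ground truth for one user.
ranked = ["i3", "i1", "i7", "i2"]
relevant = {"i1", "i2"}

print(precision_at_k(ranked, relevant, 2))
print(recall_at_k(ranked, relevant, 2))
print(ndcg_at_k(ranked, relevant, 4))
```

Precision and recall ignore the order within the top k, while NDCG rewards placing relevant items closer to the top, which is why the metrics are usually reported together.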

The learning rate, the number of GNN layers, the embedding dimension, and the regularization strength are all important hyperparameters to tune for a GNN recommendation engine.

Real-world case studies demonstrate the effectiveness of GNNs in various recommendation scenarios. For example, Pinterest has successfully deployed GNNs to enhance personalized recommendations, leveraging the platform’s rich graph structure of pins and boards. Their system, known as PinSage and often discussed in academic circles and industry blogs, showcases the ability of deep learning recommendation systems to capture nuanced relationships between user interests and content.

Similarly, in e-commerce, companies like Alibaba have utilized GNNs to recommend products based on purchase history and product relationships, reporting improvements in click-through and conversion rates. Comparable systems can be prototyped with frameworks like PyTorch Geometric or DGL, which handle graph processing efficiently.

While GNNs offer significant advantages for recommendation systems, they also present challenges. The computational expense of training, particularly on large graphs with millions of nodes and edges, is a major concern. Furthermore, GNNs can be sensitive to hyperparameter choices and the quality of the input graph. Addressing these challenges requires careful attention to data preprocessing, feature engineering, and model architecture design. Techniques like graph sampling and distributed training are often employed to scale GNNs to handle massive datasets.

Future research directions include developing more efficient GNN architectures and exploring methods for incorporating external knowledge into the graph structure. As the field evolves, we can expect to see even more innovative and practical applications of GNNs in recommendation and beyond.